I love tools that can create cool-looking images out of piles of raw bits. An alternative title for this blog post could have been "A data block content similarity metric using LZ compression".

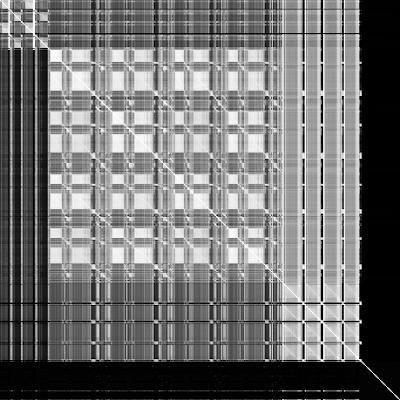

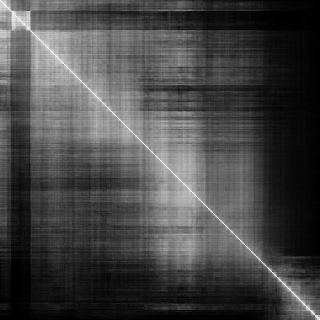

These images were created by a new tool I've been working on named "fileview", a file data visualization and debugging tool similar to Matt Mahoney's useful FV utility. FV visualizes string match lengths and distances at the byte level, while this tool visualizes the compressibility of each file block using every other block as a static dictionary. Tools like this reveal high-level file structure and the overall compressibility of each region in a file. These images were computed from single files, but it's possible to build them from two different files, too.

Each pixel represents the ratio of matched bytes for a single source file block. The block size ranges from 32 KB to 2 MB, depending on the source file's size. To compute the image, the tool compresses every block in the file against every other block, using LZ77 greedy parsing against a static dictionary built from the other block. Unlike standard LZ, the dictionary is not updated as the block is compressed. Each pixel is set to 255*total_match_bytes/block_size.

The brighter a pixel is in this visualization, the more the two blocks being compared resemble each other in an LZ compression ratio sense. The first scanline shows how compressible each block is using a static dictionary built from the first block, the second scanline uses a dictionary built from the second block, and so on:

X axis = Block being compressed

Y axis = Static dictionary block

The diagonal pixels are all white, which makes sense because a block LZ compressed using a static dictionary built from itself should be perfectly compressible (i.e. it's just one big match).
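To make the pixel computation concrete, here is a minimal Python sketch of the idea. It is not fileview's actual code: the function names, the `MIN_MATCH` threshold of 4 bytes, and the naive n-gram index used as a match finder are all assumptions made for illustration, and it is only practical on tiny inputs. The last block of a file may be shorter than `block_size`, so the sketch divides by the block's actual length.

```python
from collections import defaultdict

MIN_MATCH = 4  # assumed minimum match length; the post does not specify one


def build_index(dictionary: bytes) -> dict:
    """Map every MIN_MATCH-byte substring of the dictionary to its offsets."""
    index = defaultdict(list)
    for i in range(len(dictionary) - MIN_MATCH + 1):
        index[dictionary[i:i + MIN_MATCH]].append(i)
    return index


def matched_bytes(block: bytes, dictionary: bytes, index: dict) -> int:
    """Greedy parse: count bytes of `block` covered by matches into `dictionary`.

    The dictionary is static: the block's own bytes are never added to it.
    """
    total = 0
    pos = 0
    while pos < len(block):
        best = 0
        # Try every dictionary offset that shares the next MIN_MATCH bytes.
        for start in index.get(block[pos:pos + MIN_MATCH], ()):
            length = MIN_MATCH
            while (pos + length < len(block)
                   and start + length < len(dictionary)
                   and block[pos + length] == dictionary[start + length]):
                length += 1
            best = max(best, length)
        if best:
            total += best  # take the longest match immediately (greedy)
            pos += best
        else:
            pos += 1       # no match here: this byte would be a literal
    return total


def similarity_image(data: bytes, block_size: int) -> list:
    """Rows (Y) are dictionary blocks, columns (X) are blocks being compressed."""
    blocks = [data[i:i + block_size] for i in range(0, len(data), block_size)]
    image = []
    for dictionary in blocks:
        index = build_index(dictionary)
        image.append([255 * matched_bytes(block, dictionary, index) // len(block)
                      for block in blocks])
    return image
```

Calling `similarity_image(data, block_size)` returns a list of scanlines of 0-255 values, with the diagonal at 255 whenever a block is at least `MIN_MATCH` bytes long. The two-file variant would follow the same pattern, taking the dictionary blocks from one file and the blocks being compressed from the other.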

- High-resolution car image in BMP format:

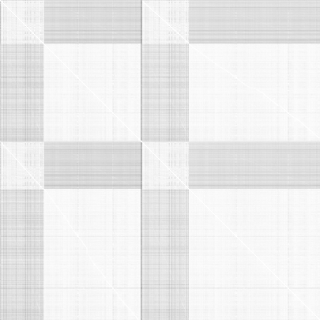

Source image in PNG format:

- An old Adobe installer executable - notice the compressed data block near the beginning of the file:

- enwik8 (XML text) - a highly compressible text file, so all blocks highly resemble each other:

- Test file (cp32e406.exe) from my corpus containing large amounts of compressed data with a handful of similar blocks:

- Hubble space telescope image in BMP format:

Source image in PNG format:

- Large amount of JSON text from a game:

- Unity demo screenshot in BMP format:

- English word list text file:

- A test file from my data corpus that caused an early implementation of LZHAM codec's "best of X arrivals" parser to slow to an absolute crawl:

- Unity46.exe:
