Here's a brain dump of the things that sometimes drive me crazy about OpenGL. (Note these are strictly my own opinions, not those of Valve or my coworkers. I'm also in a ranty-type mood today after grappling with OpenGL for several years now.) My major motivation for posting this: the GL API needs a reboot because IMO Mantle/D3D12 are most likely going to eat it for lunch soon, so we should start talking and thinking about this stuff now.

Some are minor issues, and some are specific to tracing the API, but all these issues add up to API "friction" that sometimes makes it difficult to encourage other devs to get into the GL API or ecosystem:

1. 20 years of legacy, needs a reboot and major simplification pass

Circle the wagons around a core-style API only with no compatibility mode cruft.

Simplify, KISS principle, "if in doubt throw it out"!

Mantle and D3D12 are going to thoroughly leave GL behind (again!) on the performance and developer "mindshare" axes very soon.

Global context state and the binding pattern suck. The DSA (direct state access)-style API should be standard/required.

Some bitter medicine/tough love: Most devs will take the easy path and port their PS4/Xbone rendering code to D3D12/Mantle. They will not bother to re-write their entire rendering pipeline to use super-aggressive batching, etc. like the GL community has been recently recommending to get perf up. GL will be treated like a second-class citizen and porting target until the API is modernized and greatly simplified.

2. GL context creation hell:

Creating modern GL contexts can be hair-raisingly and mind-numbingly tricky and incredibly error prone ("trampoline" contexts anyone?). The process is so error prone and platform (and occasionally even driver) specific that I would almost always recommend never going directly to the glX, wgl, etc. APIs, and instead always using a library such as SDL or GLFW (and something like GLEW to retrieve the function/extension pointers).

The de-facto requirement to always pick from a small set of large 3rd party libraries just to get a real context rolling sucks. The API should be simplified and standardized so using a 3rd party lib shouldn't be a requirement just to get a real context going.

3. The thread's current context may be an implied "this" pointer:

Function pointers returned by GetProcAddress() cannot (or should not - depending on the platform!) be used globally because they may be strongly tied to the context ("context-dependent" vs. "context-independent" in GL-speak). In other words, calling GetProcAddress() on one context and using the returned func pointer on another is either bad form or just crashes.

So is GL a C API or not?

Can we just simplify and standardize all this cruft?
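As a rough illustration of the bookkeeping this forces on a loader or tracer, here is a minimal Python sketch that keeps one function table per context instead of one global table. Everything in it is hypothetical: `DispatchCache`, the `fake_loader` stand-in, and the explicit `context` argument (the real GetProcAddress() entry points use the thread's current context implicitly).

```python
class DispatchCache:
    """Keeps a separate table of resolved entry points for every context."""

    def __init__(self, get_proc_address):
        # get_proc_address(context, name) is a stand-in for the platform loader.
        self._get_proc_address = get_proc_address
        self._tables = {}

    def lookup(self, context, name):
        # Never reuse a pointer that was resolved while another context was current.
        table = self._tables.setdefault(context, {})
        if name not in table:
            table[name] = self._get_proc_address(context, name)
        return table[name]


def fake_loader(context, name):
    """Pretend loader: returns a distinct callable per (context, name) pair."""
    return lambda: "%s@%s" % (name, context)


cache = DispatchCache(fake_loader)
bind_on_a = cache.lookup("ctx_a", "glBindVertexArray")
bind_on_b = cache.lookup("ctx_b", "glBindVertexArray")
```

The two lookups return different callables even though the function name is the same, which is exactly the implied "this" pointer problem.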

4. glGet() API deficiencies:

This is probably too tracing specific, but it impacts regular devs indirectly: if the tools suck or are non-existent because the API is hard to trace, your life as a developer will be harder.

The glGet() series of APIs (glGetIntegerv, glGetTexImage, etc.) don't have a "max_size" parameter, so it's possible for the driver to write past the end of the passed-in buffer depending on the passed-in parameters or even the global context state. These functions should accept a "max_size" parameter and fail if the supplied max_size is too small, not overwrite memory.

Computing the exact size of texture buffers the driver will read or write depends on various global context state - bad bad bad.
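To make that dependency concrete, here is a minimal Python sketch of how a tracer might bound the bytes written by a 2D readback such as glGetTexImage(). The helper name and its parameters are hypothetical. It assumes the usual pixel-store rules (rows padded to GL_PACK_ALIGNMENT, GL_PACK_ROW_LENGTH overriding the width when non-zero) and deliberately ignores the skip parameters, 3D images, and packed pixel types.

```python
def pack_image_size(width, height, components, bytes_per_component,
                    pack_alignment=4, pack_row_length=0):
    """Upper bound, in bytes, on what a 2D readback can write (hypothetical helper)."""
    if pack_alignment not in (1, 2, 4, 8):
        raise ValueError("GL_PACK_ALIGNMENT must be 1, 2, 4 or 8")
    # A non-zero GL_PACK_ROW_LENGTH replaces the image width as the row pitch.
    pixels_per_row = pack_row_length if pack_row_length > 0 else width
    row_bytes = pixels_per_row * components * bytes_per_component
    # Every row starts on a multiple of GL_PACK_ALIGNMENT bytes.
    stride = (row_bytes + pack_alignment - 1) // pack_alignment * pack_alignment
    return stride * height


# The same 3x2 RGB8 image needs a different buffer under different context state.
default_size = pack_image_size(3, 2, 3, 1)  # default alignment of 4
tight_size = pack_image_size(3, 2, 3, 1, pack_alignment=1)
wide_size = pack_image_size(3, 2, 3, 1, pack_alignment=1, pack_row_length=8)
```

None of these inputs appear in the glGetTexImage() call itself, so a tracer has to query or shadow them before it can size the destination buffer safely.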

There are hundreds of possible glGet() pname enums, some accepted by only some drivers. If you're writing a tracer or some sort of debug helper, there is no official way to determine how many values will be written by the driver given a specific pname enum. There are no official tables to determine if the indexed variants of glGet() can be used with a specified enum, or determine the optimal (lossless) type to use given a specific enum.

Also, the behavior of indexed vs. non-indexed gets & sets is not always clear to new users of the API.

Alternately, perhaps just add some glGet() metadata APIs vs. publishing tables.
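A rough Python sketch of what such metadata could look like, and how a "max_size"-style check would use it, follows. The enum values are the real ones from the GL headers, but the table layout, the hand-written entries, `checked_get()`, and the fake driver callback are all assumptions made for illustration, not an official table or API.

```python
from collections import namedtuple

GL_COLOR_CLEAR_VALUE = 0x0C22
GL_COLOR_WRITEMASK = 0x0C23
GL_MAX_TEXTURE_SIZE = 0x0D33
GL_MAX_VIEWPORT_DIMS = 0x0D3A

# Hypothetical metadata record: value count, lossless type, indexed-get support.
PnameInfo = namedtuple("PnameInfo", "count value_type indexed")

# Hand-written, illustrative entries only.
PNAME_INFO = {
    GL_COLOR_CLEAR_VALUE: PnameInfo(4, "float", False),
    GL_COLOR_WRITEMASK: PnameInfo(4, "bool", True),
    GL_MAX_TEXTURE_SIZE: PnameInfo(1, "int", False),
    GL_MAX_VIEWPORT_DIMS: PnameInfo(2, "int", False),
}


def checked_get(pname, max_values, raw_get):
    """Fail up front instead of letting the driver overrun a small buffer.

    raw_get(pname) stands in for the driver call and returns the values written.
    """
    info = PNAME_INFO.get(pname)
    if info is None:
        raise KeyError("no metadata for pname 0x%04X" % pname)
    if max_values < info.count:
        raise ValueError("buffer holds %d values, pname writes %d"
                         % (max_values, info.count))
    return raw_get(pname)


def fake_driver_get(pname):
    """Pretend driver: returns zeros of the right count for known pnames."""
    return [0] * PNAME_INFO[pname].count


clear_color = checked_get(GL_COLOR_CLEAR_VALUE, 4, fake_driver_get)
```

With this shape, a call like `checked_get(GL_COLOR_CLEAR_VALUE, 1, fake_driver_get)` raises instead of silently writing four values into a one-element buffer.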

5. glGetError()

There is no glSetLastError()-style API (the equivalent of Win32's SetLastError()), making tracing needlessly complex.

Some apps never call it, some call it once per frame, some only call it while creating resources. Some call it thousands of times at init, and never again. I've seen major shipped GL apps with per-frame GL errors. (Is this normal? Does the developer even know?)

6. Can't query key things such as texture targets

(I know some of this is being worked on - thanks Cass!) This makes tracing/snapshotting more complex due to shadowing.

Shadowing deeply interacts with glGetError()'s (we can't update our shadow until we know the call succeeded, which involves a call to glGetError(), which absorbs the context's current GL error requiring even more fancy footwork to not diverge the traced app's view of GL errors).
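The footwork described above can be sketched in a few lines of Python. This is a toy model under stated assumptions, not how any real tracer is implemented: `FakeDriver` and `Tracer` are hypothetical classes, the driver keeps a single error flag, and only the 2D texture binding is shadowed.

```python
GL_NO_ERROR = 0
GL_INVALID_ENUM = 0x0500
GL_TEXTURE_2D = 0x0DE1


class FakeDriver:
    """Stand-in for a driver with one sticky error flag."""

    def __init__(self):
        self._error = GL_NO_ERROR

    def bind_texture(self, target, name):
        # Toy rule: anything other than GL_TEXTURE_2D is an invalid target.
        if target != GL_TEXTURE_2D and self._error == GL_NO_ERROR:
            self._error = GL_INVALID_ENUM

    def get_error(self):
        # Reading the error clears it, just like glGetError().
        err, self._error = self._error, GL_NO_ERROR
        return err


class Tracer:
    def __init__(self, driver):
        self.driver = driver
        self.pending_error = GL_NO_ERROR  # error absorbed on the app's behalf
        self.bound_2d = 0                 # shadow of the 2D texture binding

    def _absorb_error(self):
        err = self.driver.get_error()
        if err != GL_NO_ERROR and self.pending_error == GL_NO_ERROR:
            self.pending_error = err
        return err

    def bind_texture(self, target, name):
        self._absorb_error()  # stash errors left over from earlier calls
        self.driver.bind_texture(target, name)
        if self._absorb_error() == GL_NO_ERROR:
            self.bound_2d = name  # update the shadow only if the call succeeded

    def get_error(self):
        # Hand back what we absorbed so the app's view of errors doesn't diverge.
        if self.pending_error != GL_NO_ERROR:
            err, self.pending_error = self.pending_error, GL_NO_ERROR
            return err
        return self.driver.get_error()


tracer = Tracer(FakeDriver())
tracer.bind_texture(GL_TEXTURE_2D, 7)  # succeeds, shadow becomes 7
tracer.bind_texture(0x1234, 9)         # fails, shadow must stay at 7
```

Even in this toy version, every traced call costs two extra error queries, and the tracer has to replay the absorbed error to the app later.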

About the recent talk of getting rid of all glGet()'s: IMO either all state should be queryable (which is almost the case today), or the API should be written with maximum perf and scalability in mind like D3D12/Mantle. The value added by the API is clearly understood in either of these extremes.

Getting rid of glGet()'s will make writing tracers & context snapshotters even trickier.

7. DSA (Direct State Access) API variants are still not standard and used/supported everywhere

DSA can make a huge difference to call overhead in some apps (such as Source1's GL backend). Just get rid of the reliance on global state, please, and make DSA standard once and for all.

8. Official spec is still not complete in 2014:

The XML spec still lacks strongly typed param information everywhere. For example:

<command>

<proto>void <name>glBindVertexArray</name></proto>

<param><ptype>GLuint</ptype> <name>array</name></param>

<glx type="render" opcode="350"/>

</command>

apitrace's glapi.py is still the only public, reliable source of this information that I know of:

GlFunction(Void, "glBindVertexArray", [(GLarray, "array")]),

Notice how glapi.py defines the type as "GLarray", while the official spec just has the nondescript "GLuint" type.
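To see what a tool can actually recover from the XML entry above, here is a small Python sketch that parses it with the standard library. The `parse_command()` helper is hypothetical and only handles the simple shape shown here; the XML string is the same entry, indented for readability.

```python
import xml.etree.ElementTree as ET

COMMAND_XML = """
<command>
    <proto>void <name>glBindVertexArray</name></proto>
    <param><ptype>GLuint</ptype> <name>array</name></param>
    <glx type="render" opcode="350"/>
</command>
"""


def parse_command(xml_text):
    """Return (return_type, function_name, [(param_type, param_name), ...])."""
    command = ET.fromstring(xml_text)
    proto = command.find("proto")
    return_type = (proto.text or "").strip()
    func_name = proto.find("name").text
    params = []
    for param in command.findall("param"):
        ptype = param.find("ptype")
        # Params without a <ptype> child keep their type in the leading text.
        type_name = ptype.text if ptype is not None else (param.text or "").strip()
        params.append((type_name, param.find("name").text))
    return return_type, func_name, params


signature = parse_command(COMMAND_XML)
```

All the parser can learn is that `array` is a `GLuint`; nothing in the entry says it names a vertex array object, which is the information glapi.py adds by hand.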

Add glGet() info to the official spec: Mentioned above. How many values does the pname enum return? What are the optimal types to use to losslessly retrieve the driver's shadow of this state? Is the pname ok to use with the indexed variants?

9. GLSL version of the week hell:

For early versions, the GLSL version may not sync up with the GL version it was first defined in, making things even more needlessly confusing. And this is before you add in things like GLSL extensions (*not* GL extensions). Can be overwhelming to beginners.

10. No equivalent of standard/official D3DX lib+tools for GL:

Texture/pixel format conversion helpers that don't rely on using the driver or a GL context

KTX format interchange hell: The few tools that read/write the KTX format (GL's equivalent of DDS) can't always read/write each other's files.

Devs just need the equivalent of Direct3D's DXTEX tool, with source.

The KTX examples just show how to load a KTX file into a GL texture. We need tools to convert KTX files to/from other standard formats, such as DDS, PNG, etc.

A GLSL compiler should be part of this lib (just like you can compile HLSL shaders with D3DX).

11. GL extensions are written as diffs vs the official spec

So if you're not an OpenGL Specification Expert it can be extremely difficult to understand some/many extensions.

Related: The official spec is written for too many audiences. Most consumers of the spec will not be experts in parsing it. The spec should be divided up into a developer-friendly spec vs a deeper spec for the driver writers. Extensions should not be pure deltas vs. the spec - who can really understand that?

12. Documentation hell

We've got 20 years of GL API cruft out there that adds noise to Google searching for GL API information, and beginners can get easily tripped up by bad/legacy documentation/examples.

13. MakeCurrent() hell

Can be extremely expensive, hidden extra variable cost with some extensions (I'm looking at you NV bindless texturing!), can crash drivers (or even the GPU!) if called within a glBegin/glEnd bracket, etc.

The behavior and performance of this call needs to be better specified and communicated to devs.

14. Drivers should not crash the GPU or CPU, or lock up when called in undefined ways via the API

Should be obvious by now. Please hire real testers and bang on your drivers!

Better yet: Structure the API to minimize the # of undefined or unsafe patterns that are even possible to express via the API.

15. Object deletion with multiple contexts, cross-context refcounting rules, "zombie" objects:

Good luck if the object being deleted is currently bound on another context.

Trying to call glGet()'s on a deleted object (that is still partially "live" because it's bound or attached somewhere) - behavior can differ between drivers.

All of this is needless overhead/complexity IMO.

Makes 100% reliable snapshotting and restoring GL context state very, very difficult.

I see world-class developers screw this up without knowing it, which is a clear sign that the API and/or tool ecosystem is broken.

16. Shader compiling/program linking hell

Major performance implications to shader compiling/linking.

Tokenized shader programs work. Direct3D is the existence proof that this approach works. The overall amount of pain GLSL has caused developers porting from D3D and end users (due to slow load times) is incredible, yet GL still only accepts textual GLSL shaders.

Performance drastically varies between drivers. Shader compiling can be effectively a no-op on some drivers, but extremely expensive on others.

Program linking can take *huge* amounts of time.

Some drivers cache linked programs, some don't.

Program linking time can be unpredictable: fast if the program is cached, but there's no way to query if the program is already cached or not. Also no way to query if the driver even supports caching.

Some drivers support threaded compilation, some don't. No way to query if the driver supports threaded compilation.

Some drivers just deadlock or have race conditions when you try to exploit threaded compilation.

Just a bad API, making it hard to trace and snapshot: Shaders can be detached after linking. Lots of linked program state is just not queryable at all, requiring link time shadowing by tracers.

Just copy & paste what D3D is doing (again, it works and devs understand it).

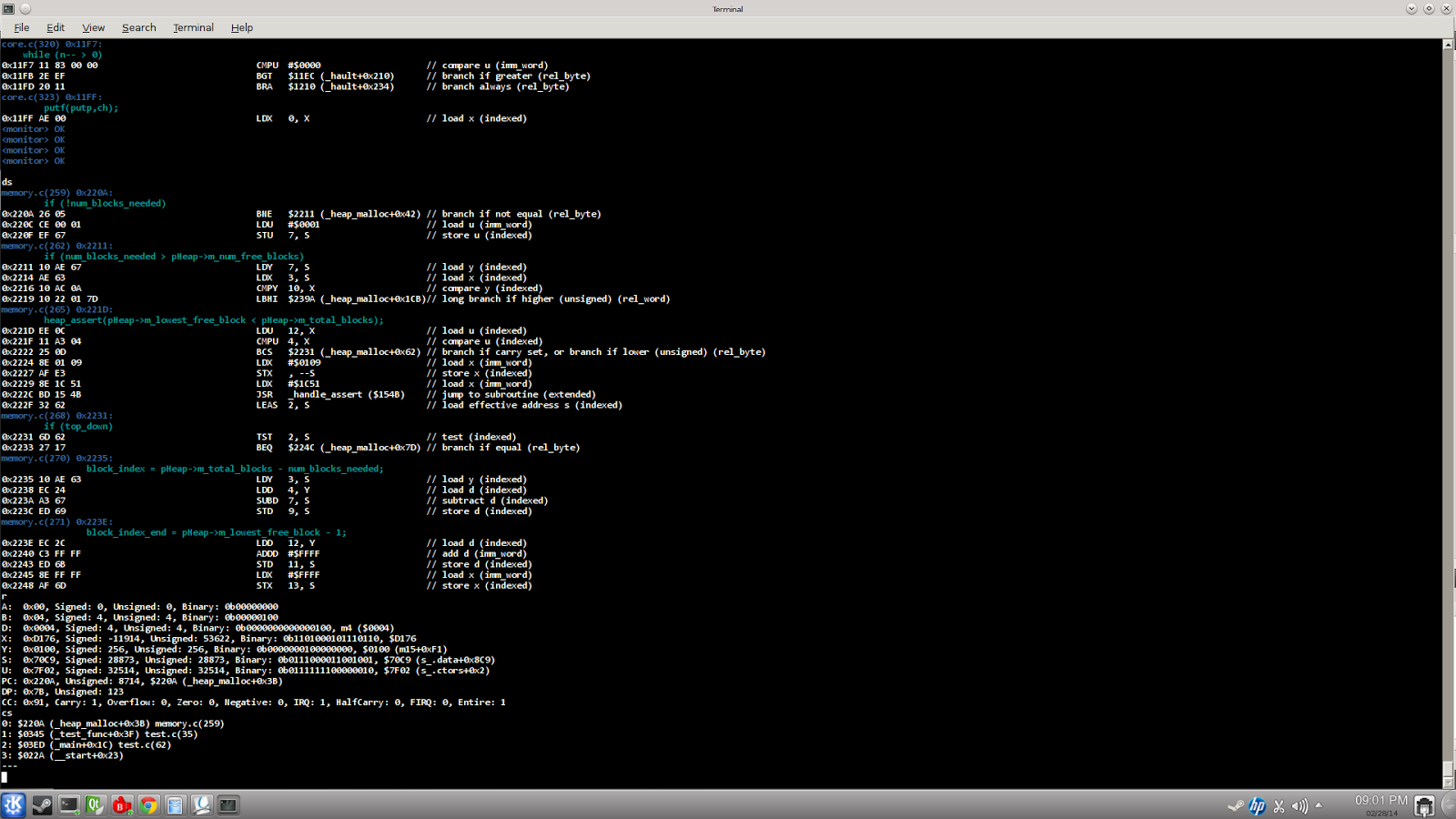

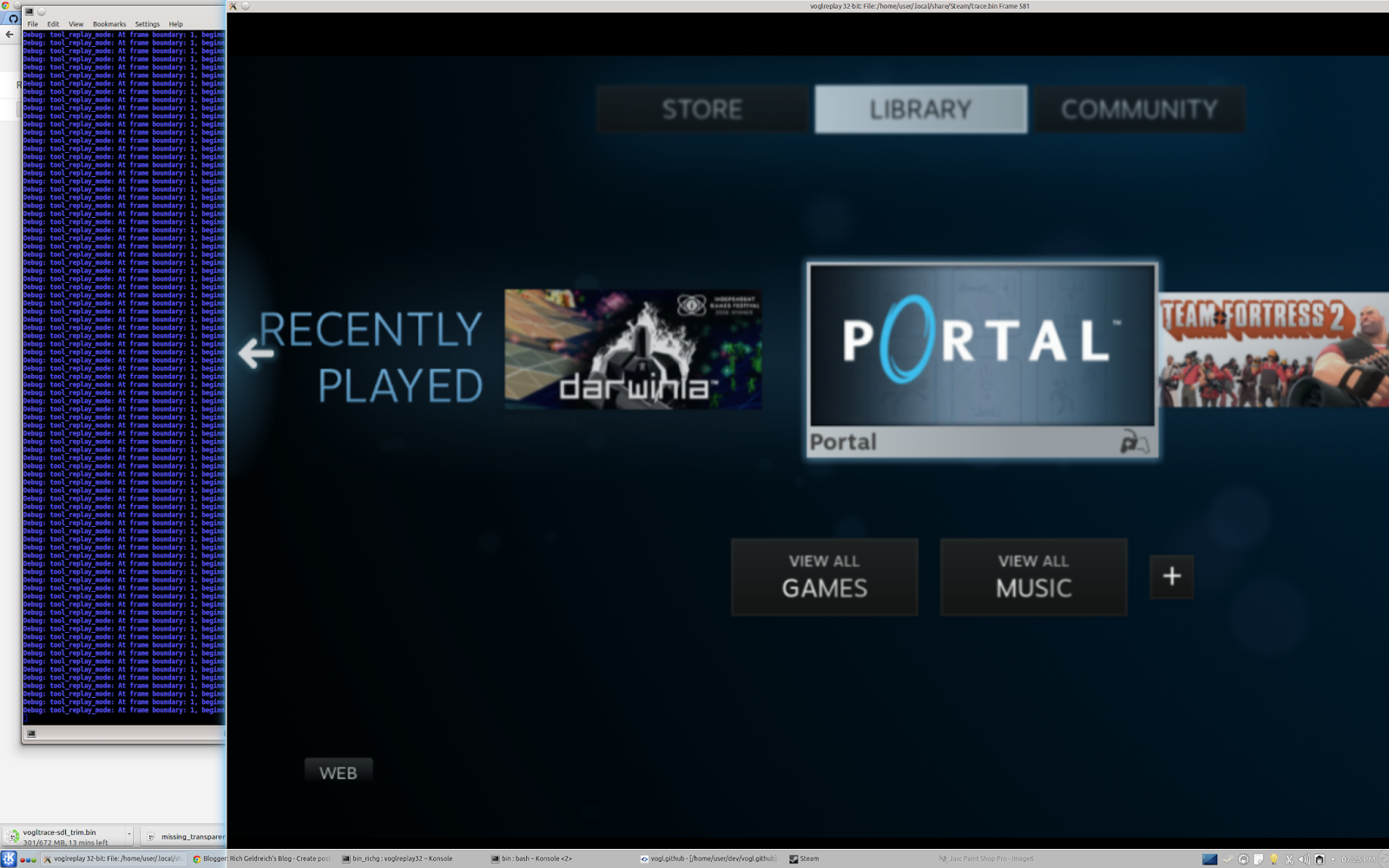

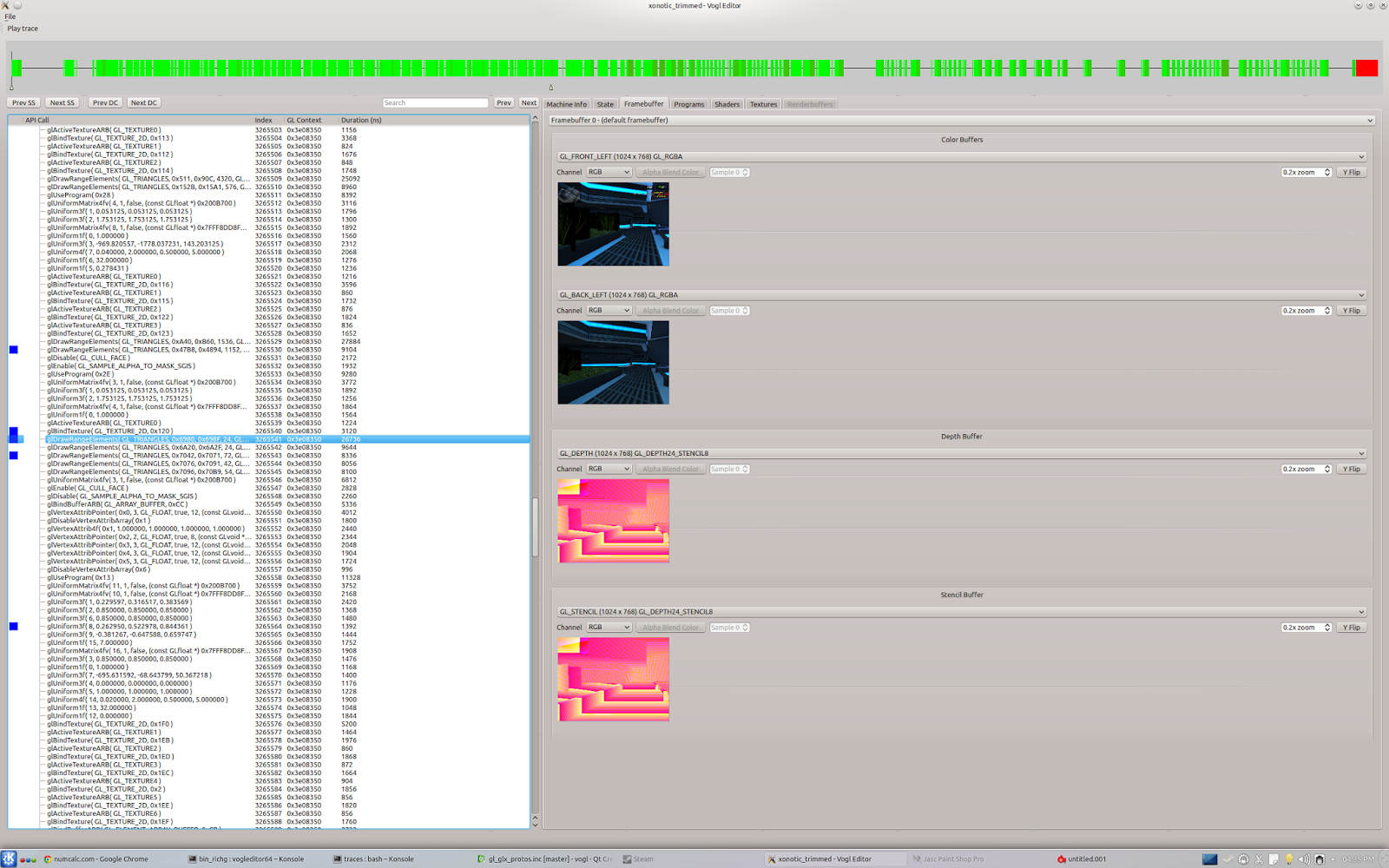

17. Difficult API to trace, replay, and snapshot/restore

Hurts tool ecosystem, ultimately impacts all users of API.

API should either be written to be easily traced/replayed/snapshotted, or incredibly performant/scalable like Mantle/D3D12. Right now GL has none of these properties, putting it in a bad spot from a value proposition perspective.

API authors should focus more on VALUE ADDED and less on how things should work, or how we are different from D3D because we're smarter.

18. Endless maze of GL functions (thousands of them!)

Hey - do we really need dozens of glVertexAttrib variants? Who really even uses this API?

API needs a reboot/simplification pass. Boost the "signal to noise" ratio, please.

19. Legacy complexities around v3.x API transition:

"Forward compatible", "compatibility" vs. "core" profiles etc. etc. etc.

Devs should not have to master this stuff to just use the API to render shaded triangles.

"Core" should not even be in the lexicon.

20. Reliably locking a buffer with DISCARD-semantics on all drivers without stalling the pipeline:

Do you use a map flag? BufferData() with NULL? Both, either, etc.?

What lock flag or flags do you use? Or does the driver just completely ignore the flag?

Trivial in D3D, difficult to do reliably in GL without being an expert or having direct access to driver developers.

This stuff is incredibly important!

Special note to driver developers: What separates the REAL driver devs from wannabes is how well you implement and test stuff like this. Pipeline stalling is not an option in 2014!

21. BufferSubData() stalls when called with "too much" data on threaded drivers

No way to query what "too much" data is. Is it 4k? 8k? 256k?

22. Pipeline stalling

No official (or any) way to get a callback or debug message when the driver decides to throw up its hands and insert a giant pipeline stall into your rendering thread.

This can be the #1 source of rendering bottlenecks, yet we still have almost zero tools (or APIs to help us build these tools) to track them down.

23. Threaded drivers hell

Some manufacturers decide to forcibly auto-enable their buggy multithreaded drivers months after titles have been shipped & thoroughly tested by the developer. (And to make matters worse, they do this without informing the developer of the "app profile" change or additions.)

Some multithreaded drivers have buggy glGet()'s when threading is enabled - makes snapshotting a nightmare.

No official way to query or control whether or not the driver will use multithreading.

No way to specify to the driver that a tracer is active which may issue a lot of glGet()'s (that the app would not normally do).

Boneheaded threaded drivers that slow to an absolute crawl and stay there when an app or tracer constantly issues glGet()'s (just use a heuristic and automatically turn threading off!)

24. Timestamp queries can stall the pipeline on some drivers

Makes them useless for cross platform, reliable GPU profiling. GL spec should strongly define when the driver is allowed to stall on these queries. Unnecessary stalling should be redefined as a driver bug (introduced by sometimes lazy/incompetent driver developers who don't understand how key these little APIs can be).

For reference, NVidia does this stuff correctly. If you are a driver writer working on pipeline query code, please measure your implementation vs. NVidia's driver before bothering to release it.

25. GL is really X different APIs (one per driver, sometimes per platform!) masquerading as a single API.

You can't/shouldn't ship a GL product until after you've thoroughly tested for correctness and performance on all drivers (in both threaded and non-threaded modes). You will be surprised at the driver differences. This came as a big shock to me after working for so long with D3D.

This indicates to me that Khronos needs to be more proactive at testing and validating the drivers. GL needs the equivalent of the WHQL process.

26. Extension hell

One of the touted advantages of GL is its support for extensions. I argue that extensions actually harm the API overall, not help it.

I've been through a large number of major and minor GL callstreams (intricately!) over the previous ~1.5 years. (Before that I was the dev actually making togl work and be shippable on all the drivers. I can't even begin to communicate how difficult and stressful that process was 2+ years ago.) Excluding the driver devs I've probably worked with more real GL callstreams than most GL devs out there. Sadly, in many cases, some to many of the most advanced "modern" extensions barely work yet (and sometimes vendors will even admit this fact publicly). Or, if you try to use a cool-sounding extension you quickly discover that you're pushing a little-used (and little-tested) path down the driver and the thing is useless for all practical purposes.

From studying and working with the callstreams, it's apparent that devs do a massive MIN() operation across the functionality implemented on the available/targeted drivers. This typically means core profile v3.x, maybe also a 4.x backend with very simple/safe operations. (Or it's a small title that just uses compatibility GL like it was still 1998 or 2003 - because that's all they need.) They don't bother with most extensions (especially the "modern" ones) because they either don't work reliably (because the driver paths that implement them are not tested on real apps at all - the classic chicken and egg problem), or they are only supported (sometimes barely) by 1 driver, or the value add just isn't there to justify expanding the product testing matrix even more.
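The MIN() operation is easy to picture as a set intersection. The Python sketch below is only an illustration: the driver names and their capability reports are made up, and `common_feature_set()` is a hypothetical helper, though the extension strings themselves are real ones.

```python
def common_feature_set(driver_caps):
    """Lowest GL version and the extensions every targeted driver reports."""
    versions = [caps["version"] for caps in driver_caps.values()]
    extension_sets = [set(caps["extensions"]) for caps in driver_caps.values()]
    # Assumes at least one driver; set.intersection() needs one or more sets.
    return min(versions), set.intersection(*extension_sets)


# Hypothetical capability reports for three made-up drivers.
DRIVER_CAPS = {
    "driver_a": {
        "version": (4, 4),
        "extensions": {"GL_ARB_bindless_texture", "GL_EXT_direct_state_access",
                       "GL_EXT_texture_filter_anisotropic"},
    },
    "driver_b": {
        "version": (4, 3),
        "extensions": {"GL_EXT_direct_state_access",
                       "GL_EXT_texture_filter_anisotropic"},
    },
    "driver_c": {
        "version": (3, 3),
        "extensions": {"GL_EXT_texture_filter_anisotropic"},
    },
}

baseline_version, baseline_extensions = common_feature_set(DRIVER_CAPS)
```

In this made-up matrix the shippable baseline collapses to GL 3.3 plus one long-established extension; the "modern" ones drop out as soon as a single driver lacks them.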

Additionally, some of these modern extensions are very difficult to trace, which means that whatever debugging tools employed by the developer aren't compatible with them. So you need a fallback anyway, and if the devs must implement a fallback they might as well just ship the fallback (which works everywhere) and not worry about the extension (unless it adds a significant amount of value to the product).

So unless it's non-extended GL it might as well not be there to a large number of devs who just want to ship a reliable/working product.