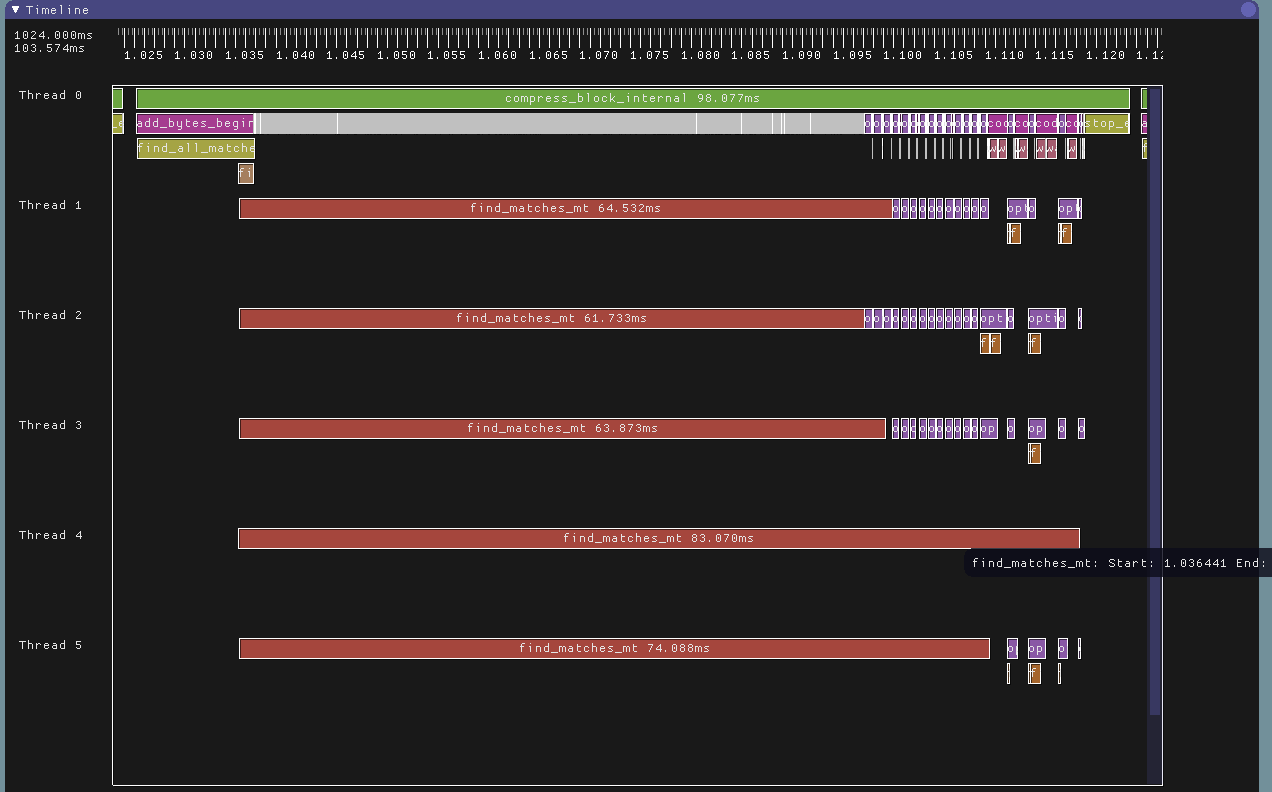

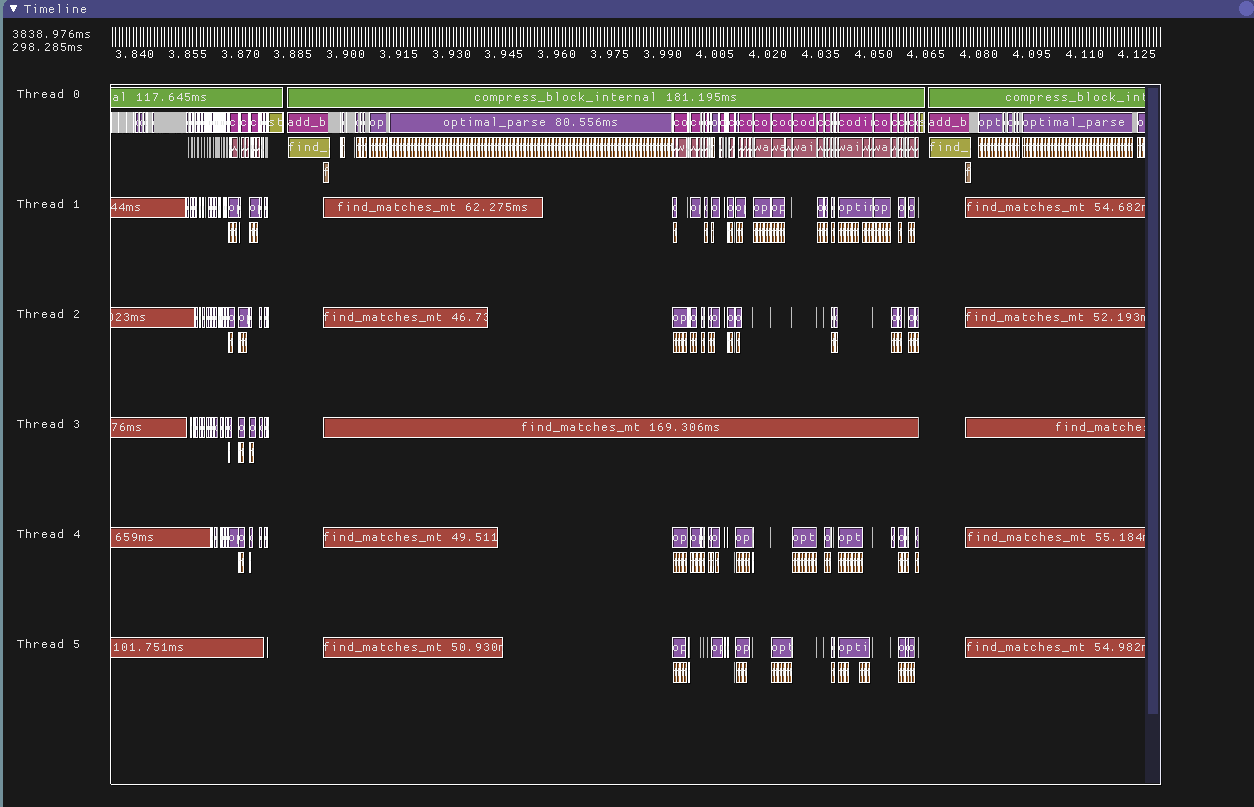

After several man months of tool making, instrumenting and compiling our own

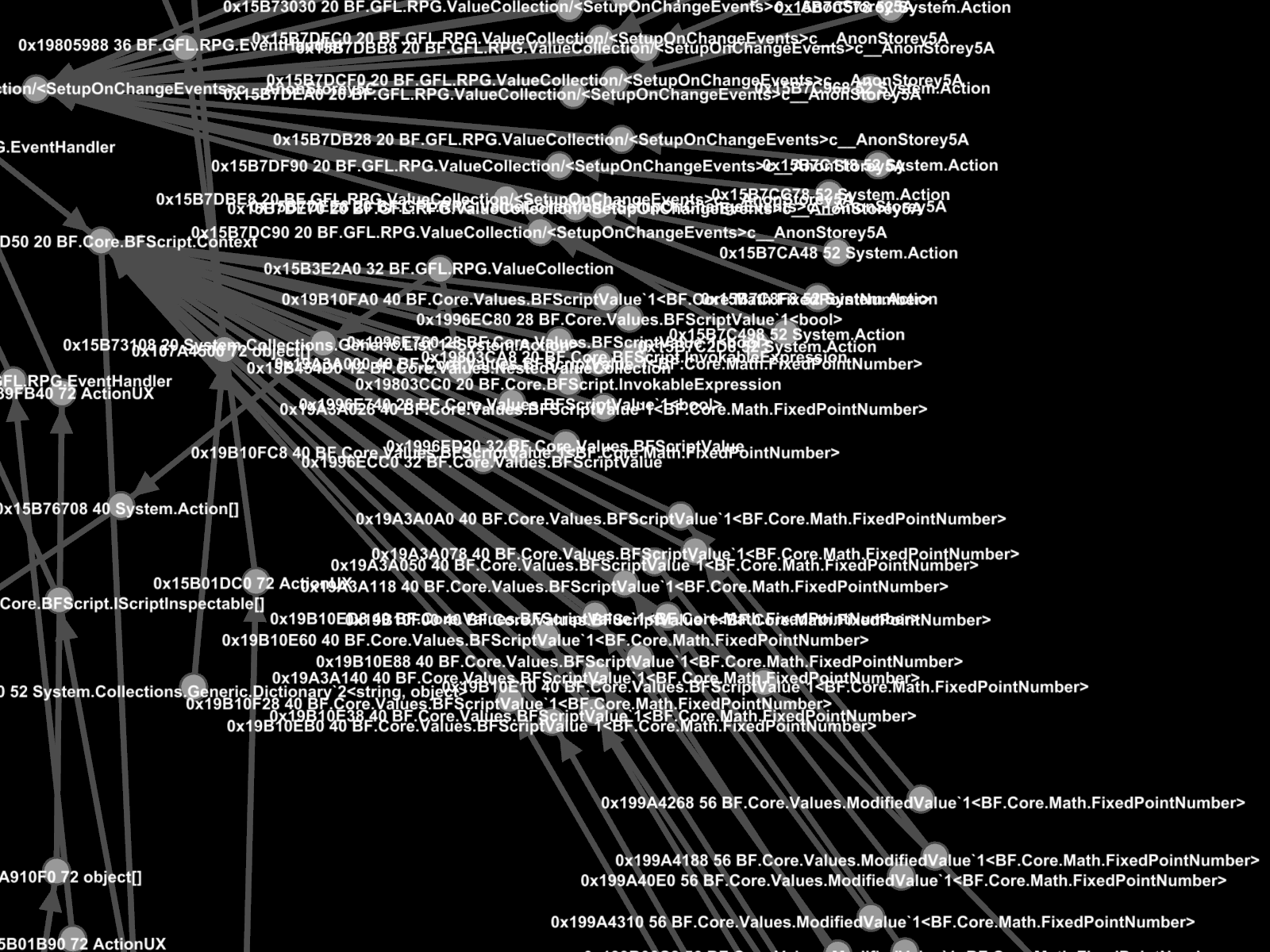

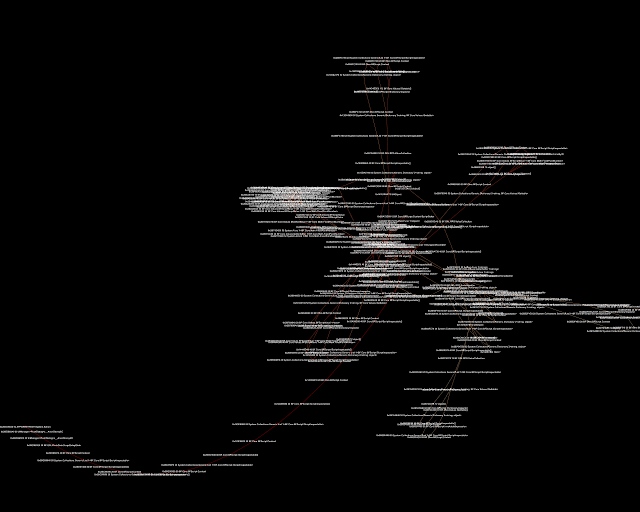

custom Mono DLL, and crawling through 5k-30k node

heap allocation graphs in

gephi, our first Unity title (

Dungeon Boss for iOS/Android) is now no longer leaking significant amounts of Mono heap memory. Last year, our uptime on 512MB iOS devices was 15-20 minutes, now it's hours.

It can be very easy to construct complex systems in C# which have degenerate (continually increasing) memory behavior over time, even though everything else seems fine. We label such systems as "leaking", although they don't actually leak memory in the C/C++ sense. All it takes is a single accidental strong reference somewhere to mistakenly "leak" huge amounts of objects over time. It can be a daunting task (even for the original authors) to discover how to fix a large system written in a garbage collected language so it doesn't leak.

Here's a brain dump of what we learned during the painful process of figuring this out:

- Monitor your Unity app's memory usage as early as possible during development. Mobile devices have some pretty harsh memory restrictions (see here to get an idea for iOS), so be sure to test on real devices early and often.

Be prepared for some serious pain if you only develop and test in the Unity editor for months on end.

- On iOS your app will receive low memory warnings when the system comes under memory pressure. (Note that iOS can be very chatty about issuing these warnings.) It can be helpful to log these warnings to your game's server (along with the amount of used client memory), to help do post-mortem analysis of why your app is randomly dying in the field.

- Our (unofficial) low-end iOS devices are the iPhone 4/4s and iPad Mini 1st gen (512MB devices). If our total allocated memory (according to Xcode's Memory Monitor) exceeds approx. 200MB for sustained periods of time, the app will eventually be ruthlessly terminated by the kernel. Ideally, don't use more than 150-170MB on these devices.

- In Unity, the Mono (C#) heap is managed by the Boehm garbage collector. This is basically a C/C++-style heap with a garbage collector bolted on top of it.

Allocating memory is not cheap in this system. The version of Mono that Unity uses is pretty dated, so if you've been using the Microsoft .NET runtime for C# development then consider yourself horribly spoiled.

Treat the C# heap like a very precious resource, and study what C/C++ programmers do to avoid constantly allocating/freeing blocks, such as using custom object pools. Avoid using the heap by preferring C# structs over classes, avoiding boxing, using StringBuilder when messing with strings, etc.
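As a rough illustration of the pooling and StringBuilder advice, here is a minimal sketch. The `Projectile`, `ProjectilePool` and `ScoreText` names are hypothetical and not taken from our codebase; the point is only that rented objects are recycled rather than handed back to the collector, and that one builder is reused instead of concatenating strings.

```csharp
using System.Collections.Generic;
using System.Text;

// Hypothetical pooled object; Reset() returns it to a clean state.
public sealed class Projectile
{
    public float X, Y, Speed;

    public void Reset() { X = 0f; Y = 0f; Speed = 0f; }
}

// Minimal single-type pool: instances are recycled instead of being
// dropped for the garbage collector to reclaim.
public sealed class ProjectilePool
{
    private readonly Stack<Projectile> free = new Stack<Projectile>();

    public ProjectilePool(int prewarm)
    {
        // Allocate up front, e.g. during a loading screen.
        for (int i = 0; i < prewarm; i++) free.Push(new Projectile());
    }

    public int FreeCount { get { return free.Count; } }

    public Projectile Rent()
    {
        // Only touch the heap when the pool has run dry.
        return free.Count > 0 ? free.Pop() : new Projectile();
    }

    public void Return(Projectile p)
    {
        p.Reset();
        free.Push(p);
    }
}

public static class ScoreText
{
    // One builder reused across calls. ToString() still allocates the
    // final string, but the intermediate concatenation garbage is avoided.
    private static readonly StringBuilder sb = new StringBuilder(32);

    public static string Format(int score, int combo)
    {
        sb.Length = 0;
        sb.Append("Score: ").Append(score).Append(" x").Append(combo);
        return sb.ToString();
    }
}
```

The pool is not thread safe and never shrinks, which is usually fine for per-frame gameplay objects but is a design choice worth revisiting for anything large.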

- In complex systems written in C# the careful use of weak references (or containers of weak references) can be extremely useful to avoid creating endless chains of strong object references. We had to switch several systems from strong to weak references in key places to make them stable, and discovering which systems to change can be very tricky.
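To make the "container of weak references" idea concrete, here is a minimal sketch of a listener registry built on `System.WeakReference`. The `ILevelListener`, `WeakListenerList` and `CountingListener` names are hypothetical; this is one possible shape, not the code we shipped.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical callback interface used only for this illustration.
public interface ILevelListener
{
    void OnLevelEnded(int level);
}

// Holds listeners through WeakReference, so the registry on its own
// never keeps a listener (and everything it references) alive.
public sealed class WeakListenerList
{
    private readonly List<WeakReference> listeners = new List<WeakReference>();

    public int Count { get { return listeners.Count; } }

    public void Add(ILevelListener listener)
    {
        listeners.Add(new WeakReference(listener));
    }

    // Notifies live listeners (in reverse registration order) and
    // prunes entries whose targets have already been collected.
    public void NotifyLevelEnded(int level)
    {
        for (int i = listeners.Count - 1; i >= 0; i--)
        {
            // Copy Target into a strong local first: it can become null
            // at any moment, so never test it and then read it again.
            ILevelListener target = listeners[i].Target as ILevelListener;
            if (target == null)
                listeners.RemoveAt(i);
            else
                target.OnLevelEnded(level);
        }
    }
}

// Trivial listener used to exercise the list.
public sealed class CountingListener : ILevelListener
{
    public int Calls;
    public int LastLevel;

    public void OnLevelEnded(int level)
    {
        Calls++;
        LastLevel = level;
    }
}
```

The trade-off is that something else must own each listener with a strong reference for as long as it should keep receiving callbacks; otherwise it can silently disappear after the next collection.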

- Determine up front the exact lifetime of your objects, and exactly when objects should no longer be referenced in your system. Don't just assume the garbage collector will automagically take care of things for you.

- The Boehm collector's OS memory footprint only stabilizes or increases over time, as far as we can tell. This means you should be very careful about allocating large temporary buffers or objects on the Mono heap. Doing so could unnecessarily bump up your app's Mono memory footprint, which will decrease the amount of memory "headroom" your app will have on iOS. Basically, once Mono grabs OS memory it greedily holds onto it until the end of time, and this memory can't be used for other things such as textures, the Unity C/C++ heap, etc.

- Be very careful about using Unity's WWW class to download large archives or bundles, because this class may store the downloaded data in the Mono heap. This is actually a serious problem for us, because we download compressed Unity asset bundles during the user's first game session and this class was causing our app's Mono memory footprint to increase by 30-40MB. This seriously reduced our app's memory headroom during the user's first session (which in a free-to-play game is pretty critical to get right).

- The Boehm collector grows its OS memory allocation so it has enough internal heap headroom to avoid collecting too frequently. You must take this headroom into account when budgeting your C# memory, i.e. if your budget calls for 25MB of C# memory then the actual amount of memory consumed at the OS level will be significantly larger (approximately 40-50MB in our experience).

- It's possible to force the Boehm collector used by Unity to never allocate more than a set amount of OS memory (see here) by calling GC_set_max_heap_size() very early during app initialization. Note that if you do this and your C# heap leaks, your app will eventually just abort once the heap is full.

It may be possible to call this API over time to carefully bump up your app's Mono heap size as needed, but we haven't tried this yet.

- If your app leaks, and you can't figure out how to fix all the leaks, then an alternative solution that may be temporarily acceptable is to relentlessly free up as much memory as possible by optimizing assets, switching from PVRTC 4bpp to 2bpp, lowering sound and music bitrates, etc. This will give your app the memory headroom it needs to run for a reasonable period of time before the OS kills it.

If the user can play 20 levels per hour, and you leak 1MB per level, then you'll need to find 20MB of memory somewhere to run one hour, etc. It can be far simpler to optimize some textures than to track down memory leaks in large C# codebases.
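The arithmetic above can be written down as a tiny helper, which is handy when deciding how much asset memory to claw back. This is only a sketch: the `LeakBudget` class and its method names are made up for illustration, and it assumes a constant leak per level.

```csharp
// Back-of-the-envelope leak budgeting, assuming a constant leak rate.
public static class LeakBudget
{
    // Memory (in MB) that must be freed elsewhere to survive the given
    // number of hours of play.
    public static double HeadroomNeededMB(double levelsPerHour, double leakPerLevelMB, double hours)
    {
        return levelsPerHour * leakPerLevelMB * hours;
    }

    // Hours of play before the leak has eaten the available headroom.
    public static double HoursUntilExhausted(double headroomMB, double levelsPerHour, double leakPerLevelMB)
    {
        return headroomMB / (levelsPerHour * leakPerLevelMB);
    }
}
```

With the numbers from the text, `LeakBudget.HeadroomNeededMB(20, 1, 1)` gives 20MB for one hour of play.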

- Design your code to avoid creating tons of temporary objects that trigger frequent collections. One of our menu dialogs was accidentally triggering a collection every 2-4 frames on iOS, which was crushing our performance.

- We used the Daikon Forge UI library. This library has several very serious memory leaks. We'll try to submit these fixes back to the author, but I think the product is now more or less dead (so email me if you would like the fixes).

- Add some debug statistics to your game, along with the usual FPS display, and make sure this stuff works on your target devices:

  - Current total OS memory allocated (see here for iOS)

  - Total Mono heap used and reserved (You can retrieve this from Unity's Profiler class. Note this class returns all 0's in non-dev builds.)

  - Total number of GCs so far, number of frames since last GC, or average # of frames and seconds between GCs (you can infer when a GC occurs by monitoring the Mono heap's used size every Update(): when it decreases since the last Update() you can assume a GC has occurred sometime recently)
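The "infer a GC from a drop in used heap size" trick can be sketched as a small, engine-independent class. `GcDetector` is a hypothetical name; in Unity you would call `Sample()` once per `Update()` with the Mono used size reported by the Profiler class (in the Unity versions we have in mind that is assumed to be `Profiler.GetMonoUsedSize()`, so check your version).

```csharp
// Infers garbage collections from per-frame "Mono heap used" samples:
// if the used size shrinks between two samples, a GC must have run.
public sealed class GcDetector
{
    private long lastUsedBytes = -1;          // -1 means "no sample yet"
    private int framesSinceLastGc;
    private long framesInCompletedIntervals;  // sum of frames between GCs
    private int gcCount;

    public int GcCount { get { return gcCount; } }

    public int FramesSinceLastGc { get { return framesSinceLastGc; } }

    // Average number of frames between inferred GCs. The first interval
    // is measured from the first sample, so early values are approximate.
    public double AverageFramesBetweenGcs
    {
        get { return gcCount == 0 ? 0.0 : (double)framesInCompletedIntervals / gcCount; }
    }

    // Call once per frame. Returns true when a GC is inferred.
    public bool Sample(long usedBytes)
    {
        bool collected = lastUsedBytes >= 0 && usedBytes < lastUsedBytes;
        lastUsedBytes = usedBytes;
        framesSinceLastGc++;
        if (collected)
        {
            gcCount++;
            framesInCompletedIntervals += framesSinceLastGc;
            framesSinceLastGc = 0;
        }
        return collected;
    }
}
```

This can miss a collection if enough new allocations land in the same frame to hide the drop, so treat the counts as a lower bound.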

- From a developer's perspective the iOS memory API and tool situation is a ridiculous mess:

http://gamesfromwithin.com/i-want-my-memory-apple

http://liam.flookes.com/wp/2012/05/03/finding-ios-memory/

While monitoring our app's memory consumption on iOS, we had to observe and make sense of statistics from Xcode's Memory Monitor, from Instruments, from the Mach kernel APIs, and from Unity. It can be very difficult to make sense of all this crap.

At the end of the day, we trusted Unity's statistics the most because we understood exactly how these statistics were being computed.

- Instrument your game's key classes to track the # of live objects present in the system at any one time, and display this information somewhere easily visible to developers when running on device. Increment a global static counter in your object's constructor, and decrement it in your C# destructor, i.e. the finalizer (the runtime calls this method automatically once the GC has found the object unreachable and is about to reclaim its memory).
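A minimal sketch of this kind of instrumentation is shown below. `Enemy` is a hypothetical game class; the counter uses `Interlocked` because finalizers run on a separate finalizer thread rather than on the thread that created the object.

```csharp
using System.Threading;

// Hypothetical game class instrumented with a live-instance counter.
public class Enemy
{
    private static int liveCount;

    // Number of Enemy instances constructed but not yet finalized.
    public static int LiveCount
    {
        // CompareExchange(ref x, 0, 0) is an atomic read that leaves
        // the value unchanged.
        get { return Interlocked.CompareExchange(ref liveCount, 0, 0); }
    }

    public Enemy()
    {
        Interlocked.Increment(ref liveCount);
    }

    // C# "destructor" syntax declares a finalizer. It runs on the
    // finalizer thread some time after the object becomes unreachable.
    ~Enemy()
    {
        Interlocked.Decrement(ref liveCount);
    }
}
```

If `Enemy.LiveCount` keeps climbing from level to level, something is still holding references to old instances. Finalizers add some collector overhead, so it may be worth compiling them out of release builds.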

- On iOS, don't be shy about using PVRTC 2bpp textures. They look surprisingly good vs. 4bpp, and this format can save you a large amount of memory. We wound up using 2bpp on all textures except for effects and UI sprites.

- The built-in Unity memory profiler works pretty well on iOS over USB. It's not that useful for tracking down gnarly C# scripting leaks, but it can be invaluable for tracking down asset problems.

- Here's our app's current memory usage on iOS from late last week. Most of this data is from Unity's built-in iOS memory profiler.

- Remember that leaks in C# code can propagate downstream and cause apparent leaks on the Unity C/C++ heap or asset leaks.

- It can be helpful to mentally model the Mono heap as a complex directed graph, where the nodes are individual allocations and the edges are strong references. Anything directly or indirectly referenced from a root (a static field, a reference on the stack, etc.) won't be collected. In a large system with many leaks, try not to waste time fixing references to leaf nodes in this graph. Attack the problem as high up near the roots as you possibly can.

On the other hand, if you are under a large amount of time pressure to get a fix in right now, it can be easier to just fix the worst leaks (in terms of # of bytes leaked per level or whatever) by selectively nulling out key references to leafier parts of the graph you know shouldn't be growing between levels. We wrote custom tools to help us determine the worst offenders to spend time on, sorted by which function the allocation occurred in. Fixing these leaks can buy you enough time to properly fix the problem.
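The graph model can be played with directly in a few lines of code. The sketch below is a toy, not our actual tooling: `HeapGraph` and the string node names are hypothetical, nodes stand in for allocations, and edges stand in for strong references. It shows why cutting one edge near a root releases far more than nulling out a leaf.

```csharp
using System.Collections.Generic;

// Toy model of the managed heap: nodes are allocations, edges are
// strong references. Anything reachable from a root survives a GC.
public sealed class HeapGraph
{
    private readonly Dictionary<string, List<string>> edges =
        new Dictionary<string, List<string>>();

    public void AddReference(string from, string to)
    {
        List<string> targets;
        if (!edges.TryGetValue(from, out targets))
        {
            targets = new List<string>();
            edges[from] = targets;
        }
        targets.Add(to);
    }

    // Models nulling out a field. Returns false if no such edge existed.
    public bool RemoveReference(string from, string to)
    {
        List<string> targets;
        return edges.TryGetValue(from, out targets) && targets.Remove(to);
    }

    // Depth-first walk from the roots; the result is the set of nodes
    // the collector would be unable to free.
    public HashSet<string> Reachable(IEnumerable<string> roots)
    {
        var seen = new HashSet<string>();
        var pending = new Stack<string>();
        foreach (string root in roots)
        {
            if (seen.Add(root)) pending.Push(root);
        }
        while (pending.Count > 0)
        {
            List<string> targets;
            if (!edges.TryGetValue(pending.Pop(), out targets)) continue;
            foreach (string target in targets)
            {
                if (seen.Add(target)) pending.Push(target);
            }
        }
        return seen;
    }
}
```

For example, with a chain root → dialog → level → {enemyA, enemyB}, removing the level → enemyA edge frees a single node, while removing root → dialog frees the dialog, the level and everything under it.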